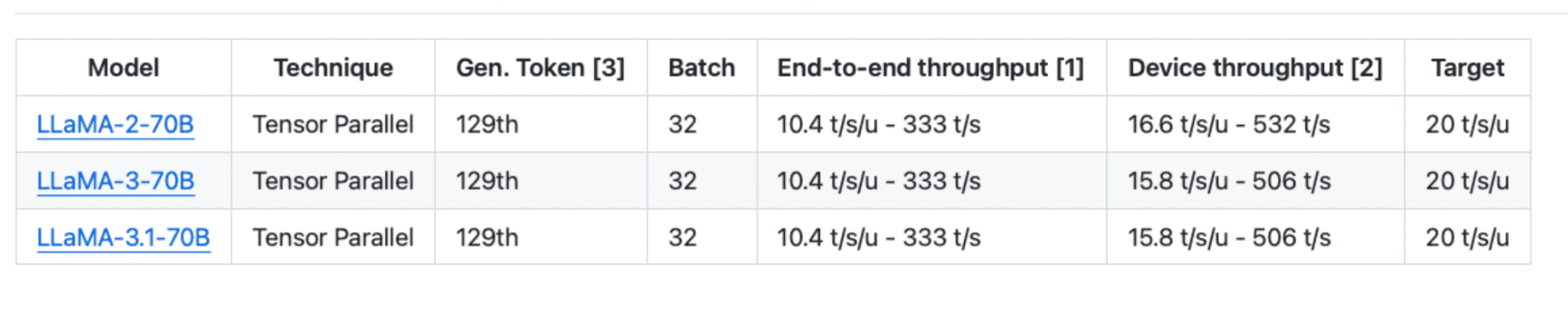

Llama-3.1 Announcement

We are happy to announce that we have brought up support for Llama-3.1-70B inference on Tenstorrent’s 8-chip systems, the TT-QuietBox and the TT-LoudBox.

The source code for Llama-3.1-70B and our other supported models is available on our GitHub. We have also merged support for Llama-3.1-8B, which runs on our single-chip n150 card.

Implementation highlights:

- Fractured with 8-way tensor parallelism

- Uses FlashAttention and FlashDecode

- Uses mixed BF16, BFP8, and BFP4 precision

- Performance was measured in eager mode with tracing disabled
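To make "8-way tensor parallelism" concrete, here is a minimal, hypothetical sketch of the general technique for a single linear layer. It is not Tenstorrent's implementation and uses no tt-metal or TT-NN API. The 8 "devices" are simulated with plain Python lists, and the helper names (`shard_columns`, `all_gather_columns`, `tensor_parallel_linear`) are illustrative assumptions. Each device holds one column slice of the weight matrix and multiplies the full activation by that slice. The per-device outputs are then concatenated (an all-gather), which reproduces the result of the unsharded layer.

```python
"""Toy illustration of 8-way column-parallel tensor parallelism.

Hypothetical sketch: the 8 "devices" are plain Python lists, not
Tenstorrent chips, and nothing here uses the tt-metal / TT-NN API.
"""
from typing import List

Matrix = List[List[float]]

NUM_DEVICES = 8  # assumption: one weight shard per chip in an 8-chip system


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive dense matrix multiply: (m x k) @ (k x n) -> (m x n)."""
    return [
        [sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def shard_columns(w: Matrix, n: int) -> List[Matrix]:
    """Split W column-wise into n equal shards, one per device."""
    cols = len(w[0])
    assert cols % n == 0, "output width must divide evenly across devices"
    step = cols // n
    return [[row[d * step:(d + 1) * step] for row in w] for d in range(n)]


def all_gather_columns(parts: List[Matrix]) -> Matrix:
    """Concatenate each device's output slice back into the full output rows."""
    return [sum((p[i] for p in parts), []) for i in range(len(parts[0]))]


def tensor_parallel_linear(x: Matrix, w: Matrix, n: int = NUM_DEVICES) -> Matrix:
    """Each device multiplies the full activation by its own weight shard."""
    shards = shard_columns(w, n)
    # On real hardware these n matmuls would run concurrently, one per chip.
    partial_outputs = [matmul(x, shard) for shard in shards]
    return all_gather_columns(partial_outputs)


if __name__ == "__main__":
    x = [[1.0, 2.0]]
    w = [[float(i + j) for j in range(16)] for i in range(2)]
    assert tensor_parallel_linear(x, w) == matmul(x, w)
```

Because each device stores only 1/8 of the weights, the memory footprint per chip shrinks accordingly. That is the main reason a 70B-parameter model can be spread across an 8-chip system. Real implementations also use row-parallel layers, whose partial sums are combined with an all-reduce rather than a concatenation.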

We are working on optimizations to reach our target of 20 tokens/second/user. Buy one of our 8-chip systems (TT-QuietBox or TT-LoudBox) to try Llama-3.1-70B at home on Tenstorrent hardware!
