The Ojo-Yoshida Report | With Nvidia, It's Always Take It or Leave It

What’s at stake:

To Cuda or not to Cuda revives the decades-old debate over licensed vs. open-source software. But what is really at stake is safety for those who choose to develop their own AV software stack outside the context of Nvidia’s safety-certified SoC and Drive OS. The onus of qualification then falls on the carmakers’ system integrators. It’s “a huge undertaking,” to say the least, according to a safety expert.

In designing next-generation highly automated vehicles, carmakers’ top priority has to be the right advanced automotive SoC. OEMs need a highly integrated chip with enough processing capability to power neural networks, support sensor fusion and manage central engine functions in new ADAS models.

Whether to make their own or buy an off-the-shelf chip is another important decision.

Developing in-house hardware is a bold move. But why not? Tesla designed its “Full Self-Driving (FSD)” chip for its Hardware 3.0, after the company developed Hardware 1.0 based on Mobileye’s SoC, and Hardware 2.0 by using Nvidia Drive PX 2.

Too much hardware talk overlooks the consequential role of software in carmakers’ overall system design. Who designs the AV stack? How do carmakers test it? If they opt for a complete AV stack provided by a chip company like Nvidia, could they modify it for the sake of differentiation?

Tenstorrent over Nvidia?

In rolling out its newest AI processor engine, Tenstorrent told us it is gaining auto industry mindshare, signing up as-yet-unnamed carmakers as customers.

Why would an OEM prefer Tenstorrent to better known AV SoC solutions from, say, Nvidia?

Tenstorrent’s answer is the company’s open-source strategy applied to its software and RISC-V cores. Tenstorrent explained that many customers find Cuda is not an option because some automotive applications require 100% code inspection and testing. They can’t do that because Cuda libraries are proprietary.

Not fully convinced, the Ojo-Yoshida Report went back to Tenstorrent and asked for examples that illustrate the serious software challenges car OEMs face when designing highly automated ADAS vehicles on a proprietary hardware and software platform.

A good example is what Tesla went through, when Jim Keller, now CEO of Tenstorrent, worked there between 2016 and 2018.

What Tesla went through with Nvidia

Elon Musk hired Keller, a legendary CPU designer, during Tesla’s transition from Hardware 2.0 to Hardware 3.0. With Pete Bannon, Keller led the FSD chip project starting in 2016. By that time, Tesla had decided to develop its own chip, claiming it saw no alternative solution that fit its autonomous driving problem.

Responding to our email, Keller said that the trouble began when Tesla forsook Nvidia’s software, while Tesla was still using Nvidia’s chip for Hardware 2.0. “Tesla made the decision not to use Nvidia’s software.”

As Keller explained, “The problem was, and still is today, that much of Cuda’s cuDNN (Cuda Deep Neural Network library) libraries are proprietary. When you are using proprietary software, even when you test it you aren’t always sure what it is going to do when deployed.”

So, Tesla wrote its own code.

Keller explained, “In addition to the safety concerns, it was much more straightforward to debug Tesla’s own code than to guess what was happening in the proprietary libraries.”

Keller concluded, “In the end, Tesla’s software was known, testable, and ran faster. It was a smart decision to write it ourselves.”

Keller also noted that Nvidia’s solutions are “expensive and proprietary,” and even if OEMs build their own stack or want to add their own flavor to Nvidia’s AV software stack, they’d end up “having to use some Nvidia software.” This is because there is no way for them to inspect the code on Nvidia’s SoC.

Safety argument

Nvidia’s DRIVE OS is not free. It is “licensed” to all customers who purchase NVIDIA DRIVE Orin SoCs, according to Nvidia. It comes in safety-certified and non-safety-related variants (built on Linux, which itself is not currently safety certified), a company spokesperson explained. “Our safety-certified software comes with a list of usage rules which customers must follow to remain certified. As a result, customers do not modify the system-level components we provide.”

Despite the Nvidia taboo against modifying its software, Nvidia is signing up some leading OEMs.

Why?

One explanation is safety.

To its credit, Nvidia is keenly aware that it must provide customers with software certified to the functional safety standard ISO 26262. The company spokesperson told us that Nvidia Drive OS 6.0 was recently “internally certified to 26262:2018, in automotive for use in applications up to ASIL D.” Additionally, “We’re in the process of safety certifying DRIVE OS 6.0 with TÜV SÜD, an independent, accredited assessor, as we did with DRIVE OS 5.2.”

Asked if the ASIL-D certification for Nvidia DRIVE OS actually comforts carmakers when it comes to autonomous driving, Tenstorrent’s Keller said, “We don’t know. It was very clear when ASIL-D was certifying simple components what the value was, but when building a very complex system like autonomous driving we are less certain on the value of ASIL-D certification and what the certification ensures.”

Open source vs. licensed software

The Ojo-Yoshida Report discussed the matter with PrecisionPro Engineering LLC, a new consultancy consisting of two functional safety experts, Brian Cano and Nandip Gohoel, and a functional safety manager, Keven Connelly.

This debate around Cuda “basically comes down to the long-running conflict between open-source and licensed software,” observed Brian Cano.

On one hand, licensed software is expensive but offers ongoing support and improvements. “Because the creator controls what users can and cannot do with the software, it is less flexible. It contributes in mitigating security threats,” he explained.

Open-source software is freely available for use, modification, and distribution. A user can add features and modify the source code to fit individual needs. “It has a high degree of flexibility but also has security flaws and the possibility of backdoor vulnerabilities. The community is the only source of support, and occasionally it lags behind the most recent developments in technology,” Cano added.

Beyond the familiar clash of open source vs. proprietary software, there are concerns among automakers primarily interested in running their own software stack on top of Nvidia’s safety platform, or modifying a stack they licensed from Nvidia.

According to the two functional safety experts at PrecisionPro Engineering, it is not unreasonable for OEMs to want to “freely alter the low-level driver code in order to improve performance, have more control over GPU resources, decrease latency, lower development costs, etc. while developing their own AV stack.”

But the black-box nature of the Cuda libraries and closed-source code are stacked against the OEMs.

Availability of source code?

The source code of Nvidia’s Cuda libraries is not made available to its customers, largely and logically because Nvidia’s drivers are its competitive advantage. Driver and hardware are closely related. Making the driver open source would “reveal the SoC’s fundamental architecture,” according to PrecisionPro Engineering’s functional safety experts.

Nvidia’s spokesperson made clear, “It is common for companies to keep source code of low-level libraries private. This decision does not affect the safety or robustness of the software. What’s crucial is that users have tools, like safety manuals, to understand any remaining risks and their own responsibilities.”

The flip side of the argument, however, is that the onus of certifying safety is on OEMs and Tier 1s.

Another safety expert we consulted told us, “When a software component is being used outside of the context where it has been developed or safety certified, the system integrator has to perform what’s called a qualification.”

He explained, “Essentially, the system integrator has to map their own set of requirements onto the software component and perform their own testing to ensure that their requirements are all met and that the software is bug-free in their context. The level of assurance required is up to the system integrator (and their safety assessor).”

In short, qualification can be a “huge undertaking.”

Charles Macfarlane, chief business officer at Codeplay Software Ltd., agreed. “Ultimately yes, OEMs/T1s will need to do full verification of code if Nvidia does not provide software analysis tools on all code.”

Some carmakers might seek relief by labeling their vehicles SAE Level 2, making a software failure and subsequent crash the [human] driver’s fault. This, obviously, is not a recommended option.

Hurdles

While qualification presents a big hurdle, OEMs have more to worry about. Besides issues like licensed binaries and proprietary drivers, other drawbacks to developing on proprietary software include the “danger” of Nvidia suddenly adding new features or upgrades.

This could trigger disruptive adjustments or revisions to the automaker’s application stack.

Obviously, OEMs prefer to avoid vendor lock-in. Nor do they want to be beholden to Nvidia forever, because Nvidia may suddenly decide to change licensing terms or prices.

Another issue is that the Nvidia SoC (ASIL D) offers parallel/heterogeneous hardware. Due to AoU (Assumptions of Use), design methodologies and automotive software stacks can only get more complex.

They must be heavily modified, and to some extent re-designed, to cope with next-generation Nvidia platforms, explained experts at PrecisionPro Engineering. This, in their view, will further “increase architectural complexity” of Nvidia’s SoC, making it harder [for developers] to write efficient code.

The industry observers confirm what Keller described as his experience of using Nvidia’s chip at Tesla.

They noted that without total control over GPU hardware resources, OEMs won’t be able to use Nvidia’s SoC to create their own full AV stack.

Open-source software

Is ‘Open Source’ the answer? If not, what is?

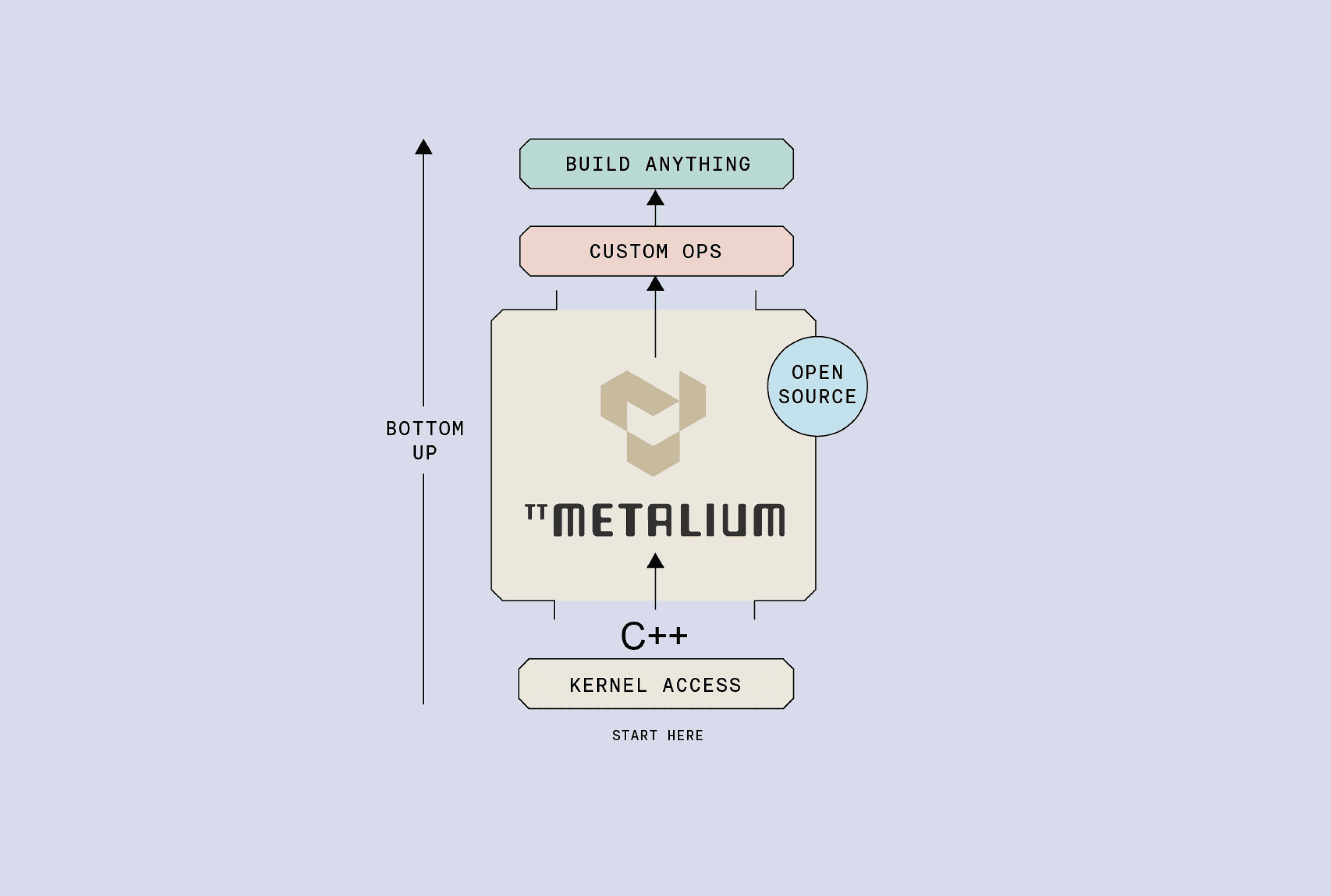

In Tenstorrent’s case, the company has open sourced what it calls “TT-Metalium,” a kernel-level software stack.

The low-level software platform is for a heterogeneous collection of CPUs and Tenstorrent devices. Users get direct access to the RISC-V processors, NoC (Network-on-Chip), and Matrix and Vector engines within the Tensix Core, according to the company. Tenstorrent’s strategy is to attract AI developers by going beyond better hardware to offer an easy-to-use open-source software stack.

Talking with the Ojo-Yoshida Report, Jasmina Vasiljevic, a Tenstorrent senior fellow, described TT-Metalium as “exactly comparable to Nvidia’s Cuda, or OpenCL, in terms of the abstraction level.” However, Tenstorrent did not design TT-Metalium to replace Nvidia’s Cuda. “It is a low-level programming model that enables developers to command program kernels on Tenstorrent hardware and get full access to all of the company’s hardware,” she explained.

Tenstorrent is targeting AI and High-Performance Computing (HPC) developers who want to write highly efficient code with unrestricted access to the hardware, the company explained. Clearly, “open source” has been one of Keller’s guiding principles at Tenstorrent.

That said, TT-Metalium isn’t higher-level open-source software designed to let system designers switch more easily from one AI accelerator to another.

Meanwhile, according to Codeplay’s Macfarlane, UXL (Unified Acceleration) Foundation — built on open source and based on open standards — is seeking to define a version of SYCL for automotive and other safety critical (SC) solutions. SYCL is a higher-level programming model to improve programming productivity on various hardware accelerators.

Such an open-source software activity could eventually satisfy system vendors (i.e. carmakers) who want the freedom to innovate and program software without getting tied to specific hardware and software platforms. But Macfarlane cautioned against the overly simplistic optimism that assumes such open-source software will solve everything.

While declining to comment on the working group’s schedule, Macfarlane noted that UXL-SC, the safety-critical working group, is “making strides towards addressing automotive.” He reported that so far, Denso and Mercedes have joined, with prospects for an automotive SoC vendor.

Bottom line:

Carmakers have considerable freedom to develop Cuda applications as long as they adhere to Nvidia’s APIs and use Nvidia’s libraries. But without full control over Nvidia’s GPU hardware resources, OEMs can’t use Nvidia’s SoC to create their own AV stacks.

This article first appeared on the Ojo-Yoshida Report.