Vision

Learnings, news, and updates from the Tenstorrent universe.

Newsroom

Tenstorrent and Movellus Form Strategic Engagement for Next-Generation Chiplet-Based AI and HPC Solutions

Enabling Cross-Foundry IP for Power and Performance Optimization

Research

Attention in SRAM on Tenstorrent Grayskull

When implementations of the Transformer's self-attention layer utilize SRAM instead of DRAM, they can achieve significant speedups.

Research

Tenstorrent is Continuing its Contributions to the RISC-V Open Source Ecosystem

Today we are pleased to announce the release of our RISC-V Architectural Compatibility Suite, now available in our GitHub repository.

Newsroom

The Ojo-Yoshida Report | With Nvidia, It's Always Take It or Leave It

With its automotive SoC, Nvidia promises carmakers a licensable ASIL-D certified software stack. But OEMs face the dilemma of either getting locked into Nvidia or designing alternative stacks of their own with no adequate support from Nvidia.

Resources

AI Hardware w/ Jim Keller

Listen to Tenstorrent CEO Jim Keller discuss why AI models are graphs and how we built our software and hardware to give you a fundamental advantage at this year's AI Hardware Summit.

Designing in 2023: 10 Problems to Solve w/ Jim Keller

Tenstorrent President and CTO Jim Keller talked about designing for the future at TSMC's 2022 Open Innovation Platform Ecosystem Forum.

The Exciting Evolution of MLOps w/ Thaddeus Fortenberry

Tenstorrent Senior Principal Engineer Thaddeus Fortenberry discusses innovations in hardware through the lens of MLOps and model-based services

About Tenstorrent

Tenstorrent builds computers for artificial intelligence.

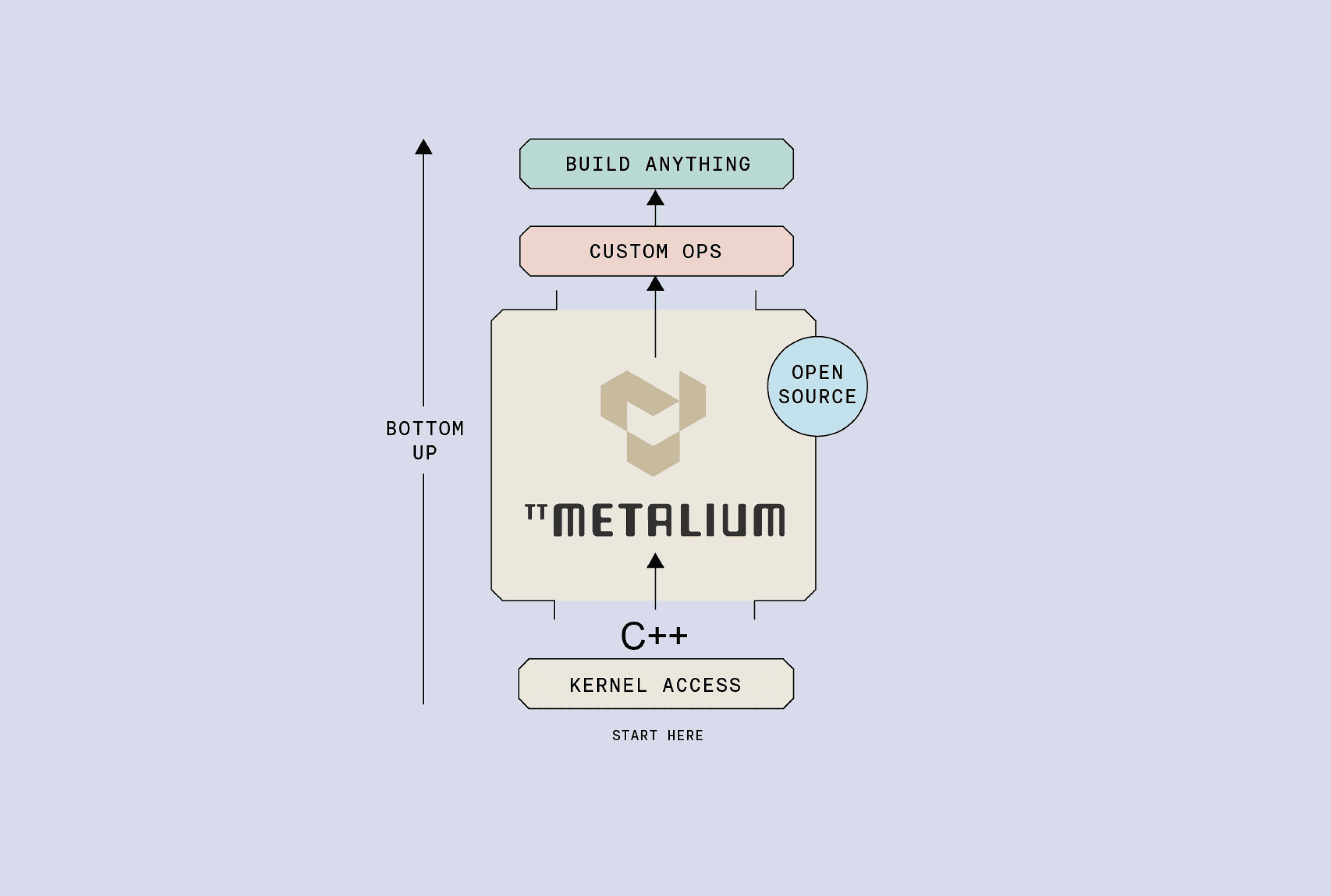

We design AI Graph Processors, high-performance RISC-V CPUs, and configurable chiplets that run our robust software stack.

Tenstorrent Team

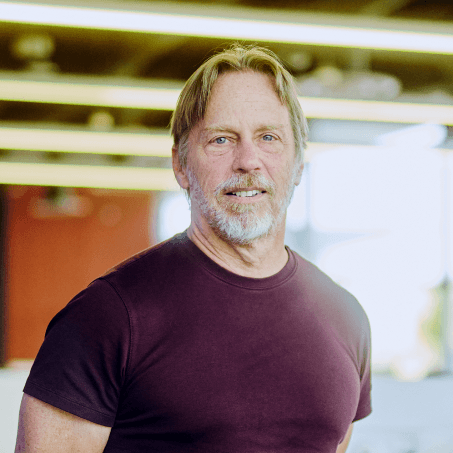

Jim Keller

Chief Executive Officer

Jim Keller is CEO of Tenstorrent and a veteran hardware engineer. Prior to joining Tenstorrent, he served two years as Senior Vice President of Intel's Silicon Engineering Group. He has held roles as Tesla's Vice President of Auto...

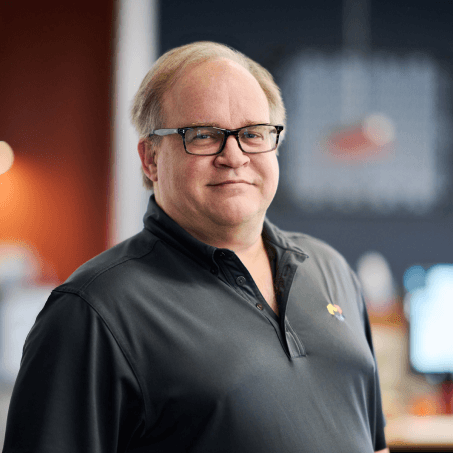

Keith Witek

Chief Operating Officer

Keith Witek is currently the Chief Operating Officer of Tenstorrent leading operations, legal, HR, Finance, facilities, compliance, licensing, and other functions. Prior to joining Tenstorrent, Keith led all aspects of Strategic A...

Christine Blizzard

Chief Marketing Officer

Christine Blizzard is the Chief Marketing Officer of Tenstorrent. Prior to joining Tenstorrent she founded y3k, a brand marketing studio with a focus on the intersection of cutting edge technology and culture. y3k clients include ...

David Bennett

Chief Customer Officer

David Bennett is the Chief Customer Officer (CCO) of Tenstorrent Inc., a unicorn start-up that builds world-class AI, Machine Learning, and RISC-V technology. Since joining Tenstorrent in June of 2022, David has already led Tensto...

Stan Sokorac

Senior Fellow, AI Hardware & Software

Stan Sokorac leads the development of Tenstorrent’s high-performance AI processing unit, Tensix, which is the core IP component in Tenstorrent’s AI accelerators. He is also the lead for Tenstorrent’s open-source AI compiler, Buda....

Jasmina Vasiljevic

Fellow, ML, Compilers and Models

Jasmina Vasiljevic, a Senior Fellow at Tenstorrent, is at the forefront of the company’s mission to address the open-source compute demands for software 2.0. She leads the Pathfinding team, a group dedicated to exploring and devel...

Olof Johansson

VP of Operating Systems and Infrastructure Software

Olof Johansson is VP of Operating Systems and Infrastructure Software at Tenstorrent. Prior to Tenstorrent, Olof led software teams in Meta’s Infrastructure Hardware group, including ASIC FW/SW, OpenBMC and server enablement. Befo...

Julie Mathis

Senior Director of Product

Julie Mathis is the Senior Director of Product at Tenstorrent Inc. Tenstorrent is a North America-based unicorn startup that builds world-class AI, Machine Learning, and RISC-V hardware and software technology. She joined Tenstorr...

Dan Bailey

Senior Fellow, Lead of Physical Engineering

Dan Bailey leads Tenstorrent’s Physical Engineering team. Dan is well known in his field for physical design leadership and specifically for his designs of high-performance flip flops and new methodologies for global clock distrib...

Bob Grim

VP of Corporate Communications and Investor Relations

Bob Grim is the Vice President of Corporate Communications and Investor Relations at Tenstorrent, and currently co-leads fundraising activities as well as manages key sales accounts. Prior to this role, Bob was a founder and Chief...

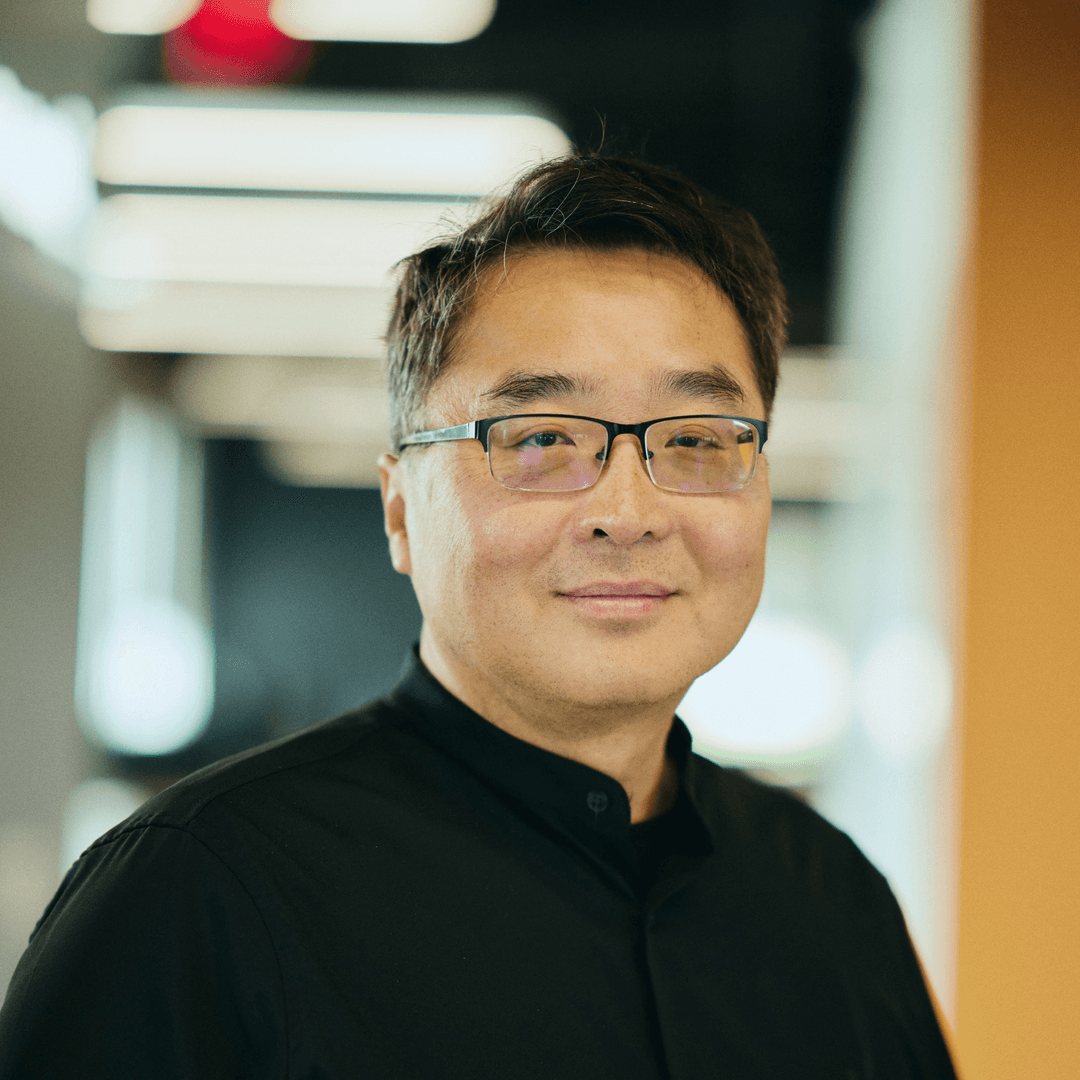

Wei-Han Lien

Chief CPU Architect and Senior Fellow

Wei-Han Lien is a Chief Architect and Senior Fellow in Machine Learning hardware architecture. He is currently leading an architecture team in defining a high-performance RISC-V CPU, fabric, system caching, and high-performance me...

Tanya Bischoff

Senior Director of Operations and Strategic Partnerships

Tanya Bischoff is Senior Director of Supply Chain and Operations at Tenstorrent, with nearly two decades of experience shaping and optimizing supply chain operations. Recognized as one of Canada's top women to watch in the supply ...